Polars confusion

Setup

Let us first generate some dummy data, which consists of labels (y_true) and

model scores (y_prob) with values in [0,1]. Furthermore we generate grouping

variables id_1 and id_2, which will later be used to partition our data.

|

|

shape: (5, 4)

┌──────┬──────┬────────┬──────────┐

│ id_1 ┆ id_2 ┆ y_true ┆ y_prob │

│ --- ┆ --- ┆ --- ┆ --- │

│ i32 ┆ i32 ┆ bool ┆ f32 │

╞══════╪══════╪════════╪══════════╡

│ 13 ┆ 2 ┆ true ┆ 0.827355 │

│ 6 ┆ 0 ┆ false ┆ 0.727951 │

│ 13 ┆ 7 ┆ false ┆ 0.26048 │

│ 2 ┆ 3 ┆ false ┆ 0.911763 │

│ 7 ┆ 2 ┆ true ┆ 0.260757 │

└──────┴──────┴────────┴──────────┘

Problem description

Metrics like precision, recall or the f1-score are defined entirely in terms of the confusion (i.e. the number true positives, false positives, true negatives and false negatives). These in turn are defined in terms of a threshold which defines the boolean predictions, being positive if and only if the score is greater than or equal to the threshold.

Thus we can ask ourselves: Which threshold can we use to optimize for example the f1-score ?

The most naive approach is to compute all values of the f1-score for a large enough number of thresholds to find some theta for which the f1-score is maximal.

The next question could be: Which thresholds can we use to optimize the f1-score on certain partitions of our data ?

We will answer both questions at once since the first question is a special case (with a one element partition).

Working with wide dataframes

An elegant solution to the problem is to use wide dataframes.

For each theta, we generate a boolean column y_pred(theta) and four columns

defining the confusion with respect to that theta. Furthermore, we add

one more column with our metric in question, lets say the f1-score. In total

we get 6*len(theta) extra columns.

Now, the whole magic of why polars is such a great choice to solve this problem is that all expression will be calculated in parallel, and in addition, we can easily group our dataframe by our id (grouping) variables that define the partition.

|

|

|

|

DataFrame with confusion and boolean predictions:

shape: (5, 14)

┌──────┬──────┬────────┬──────────┬───┬───────┬───────┬───────┬───────┐

│ id_1 ┆ id_2 ┆ y_true ┆ y_prob ┆ … ┆ tn_0 ┆ tn_1 ┆ fn_0 ┆ fn_1 │

│ --- ┆ --- ┆ --- ┆ --- ┆ ┆ --- ┆ --- ┆ --- ┆ --- │

│ i32 ┆ i32 ┆ bool ┆ f32 ┆ ┆ bool ┆ bool ┆ bool ┆ bool │

╞══════╪══════╪════════╪══════════╪═══╪═══════╪═══════╪═══════╪═══════╡

│ 5 ┆ 6 ┆ false ┆ 0.771075 ┆ … ┆ false ┆ false ┆ false ┆ false │

│ 13 ┆ 8 ┆ true ┆ 0.566425 ┆ … ┆ false ┆ false ┆ false ┆ false │

│ 0 ┆ 6 ┆ true ┆ 0.306617 ┆ … ┆ false ┆ false ┆ false ┆ true │

│ 13 ┆ 7 ┆ false ┆ 0.977795 ┆ … ┆ false ┆ false ┆ false ┆ false │

│ 6 ┆ 0 ┆ true ┆ 0.88869 ┆ … ┆ false ┆ false ┆ false ┆ false │

└──────┴──────┴────────┴──────────┴───┴───────┴───────┴───────┴───────┘

f1-scores for different thresholds and groups:

shape: (5, 3)

┌──────┬────────────┬────────────┐

│ id_1 ┆ f1_score_0 ┆ f1_score_1 │

│ --- ┆ --- ┆ --- │

│ i32 ┆ f64 ┆ f64 │

╞══════╪════════════╪════════════╡

│ 8 ┆ 0.769231 ┆ 0.444444 │

│ 0 ┆ 0.8 ┆ 0.545455 │

│ 7 ┆ 0.75 ┆ 0.571429 │

│ 3 ┆ 0.6 ┆ 0.5 │

│ 10 ┆ 0.444444 ┆ 0.285714 │

└──────┴────────────┴────────────┘

Optimal thresholds and f1-scores:

shape: (5, 4)

┌──────┬───────────────┬───────────┬──────────┐

│ id_1 ┆ theta_opt_ind ┆ theta_opt ┆ f1_opt │

│ --- ┆ --- ┆ --- ┆ --- │

│ i32 ┆ u32 ┆ f32 ┆ f64 │

╞══════╪═══════════════╪═══════════╪══════════╡

│ 8 ┆ 0 ┆ 0.1 ┆ 0.769231 │

│ 0 ┆ 0 ┆ 0.1 ┆ 0.8 │

│ 7 ┆ 0 ┆ 0.1 ┆ 0.75 │

│ 3 ┆ 0 ┆ 0.1 ┆ 0.6 │

│ 10 ┆ 0 ┆ 0.1 ┆ 0.444444 │

└──────┴───────────────┴───────────┴──────────┘

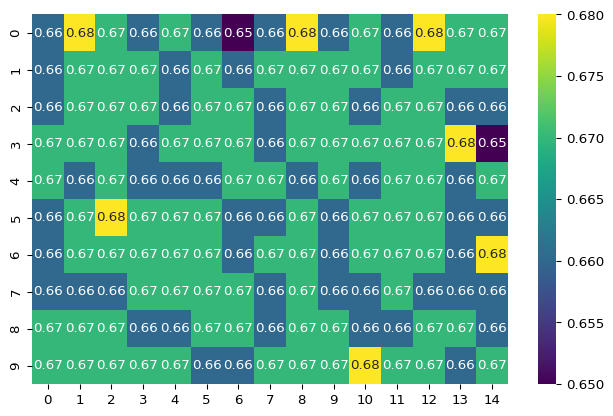

More data

Now, lets see if we can handle more data. Note that the output is generated on a single core machine and hence the parallelization is not any help here, however I think that the result is still quite impressive. PS: I tried to implement the same logic in pandas, however the performance was not even close to the one of polars and I got a lot of warnings and errors about too many columns. Feel free to benchmark this on your own or any other machine. On my 8 core 16 GB machine, the following code runs in approximately one second.

|

|

CPU: 1

Memory: 769.046875 MB

|

|

|

|

shape: (5, 4)

┌──────┬──────┬───────────┬──────────┐

│ id_1 ┆ id_2 ┆ theta_opt ┆ f1_opt │

│ --- ┆ --- ┆ --- ┆ --- │

│ i32 ┆ i32 ┆ f32 ┆ f64 │

╞══════╪══════╪═══════════╪══════════╡

│ 4 ┆ 6 ┆ 0.0 ┆ 0.67379 │

│ 10 ┆ 4 ┆ 0.0 ┆ 0.663541 │

│ 4 ┆ 5 ┆ 0.0 ┆ 0.670363 │

│ 8 ┆ 7 ┆ 0.0 ┆ 0.671941 │

│ 10 ┆ 5 ┆ 0.0 ┆ 0.671487 │

└──────┴──────┴───────────┴──────────┘

CPU times: user 919 ms, sys: 44.3 ms, total: 963 ms

Wall time: 981 ms

|

|

Feel free to experiment with the code or to build a benchmark using different techniques and tools.